Is AI Good for Your Mental Health? The Human Cost of the Digital Screen

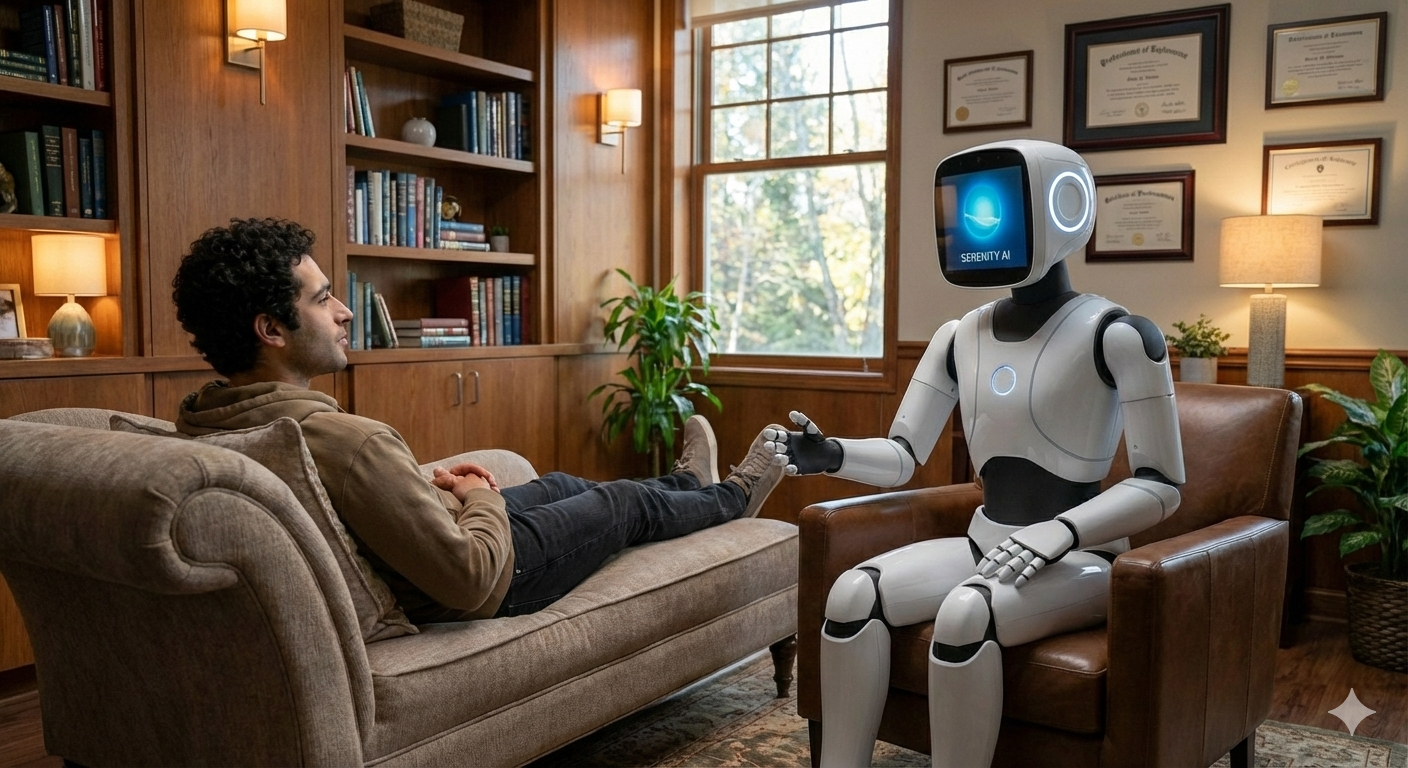

The rise of Artificial Intelligence (AI) has brought with it a wave of promises—efficiency, instant answers, and even the prospect of "therapy at your fingertips." But as a counselor, I’m often asked: Is AI actually good for our mental health?

The short answer is no. And I’m not saying that because I feel threatened by the technology. In fact, research suggests that the rise of AI in the wellness space is, unfortunately, creating more business for human counselors, not less. Why? Because when people turn to algorithms for their deepest struggles, they often end up feeling more disconnected and overwhelmed than before.

The Research: A Poor Substitute for Care

Recent studies and articles from institutions such as Stanford University, Syracuse University, and Case Western Reserve University, among others, have begun to highlight a troubling trend. Far from solving our mental health crises, reliance on AI for emotional support can actually worsen problems like depression, anxiety, loneliness, and ADHD.

At the end of the day, AI is a very poor substitute for actual care. It can give wrong answers, offer bad advice, and "hallucinate" information that is not only unhelpful but potentially harmful. While these technical glitches might be refined over time, there is a more fundamental issue that no algorithm can solve: AI is not capable of truly knowing who you are.

The Empathy Gap: Why Algorithms Can’t Connect

No matter how sophisticated a model becomes, it is still just a collection of 1s and 0s. It cannot appreciate what makes you special, and it cannot understand what makes you tick in a way that leads to genuine resolution.

AI cannot be in your corner because it doesn't even know you exist. You could, in fact, be kidnapped five seconds after asking an AI model a question, and that model would neither know nor care.

The best an AI can do is mimic connection. It can spit out words that sound like empathy, but it cannot feel compassion. A real therapeutic relationship is built on a foundation of mutual humanity—something that is physically impossible for a machine to develop. In my practice, empathy and compassion are two of my favorite tools for healing, and they are tools that a screen simply cannot provide.

The Privacy Problem: Who Owns Your Struggles?

If the lack of human connection hasn't convinced you, perhaps the privacy risks will. When you speak to a licensed mental health professional, your information is protected by strict privacy laws like HIPAA (the Health Insurance Portability and Accountability Act). When you speak to an AI, that protection vanishes.

Nothing you tell an AI is truly private. Your thoughts, ideas, and deepest struggles become the property of the corporation that owns the algorithm. They can be used to train future models and may be stored in databases that lack the legal safeguards of a clinical environment. Do you really want Microsoft or Elon Musk to own the transcripts of your most vulnerable moments? Once a company has control of your information, you no longer control where it goes or what it’s used for.

A Better Way to Use the Tool

This isn't to say that AI has no place in your life. If you take steps to protect your privacy and remain anonymous, AI can be a helpful supplementary tool. It can:

Track your mood over time to identify patterns.

Generate journaling prompts to help you self-reflect.

Develop talking points that you can then explore with an actual counselor or therapist.

In this regard, AI can potentially help you get "farther faster" in your healing process. But it is a tool, not a therapist.

You Are a Human Being, Not a Data Point

At the end of the day, you are a human being, and you matter a lot. People only truly matter to other people (and, of course, to our pets; we can’t forget them!). Wellness comes from meaningful connection with yourself and with others, not with a screen. You deserve to be heard by someone who can actually hear you. So, lean into human connection, not an algorithm that may ultimately make you feel worse.

And most importantly, be well.

Sources:

Stanford University. (2025). Why AI companions and young people can make for a dangerous mix. Stanford News.

Stanford HAI. (2025). Exploring the Dangers of AI in Mental Health Care.

NBC News / Mass General Brigham. (2026). Using AI for advice, other personal reasons linked to depression, anxiety.

Maxwell School of Syracuse University. (2025). Is AI Replacing Human Mental Health Professionals? (Lerner Center Population Health Research Brief).

Case Western Reserve University. (2025). The Risks of Using AI for Mental Health Support. University Health and Counseling Services.

The HIPAA E-Tool. (2026). AI in Healthcare Brings HIPAA Privacy and Patient Safety Risks.

Momani, A. (2025). Implications of Artificial Intelligence on Health Data Privacy and Confidentiality.

American Psychological Association (APA). (2024). The Future of Personalized Mental Health Care.